Comparing ChatGPT and SquadGPT

Published a year ago by sai @ squadgpt.ai

Why should I use SquadGPT when I can get equivalent results from ChatGPT/ChatGPT Plus? In this post, I answer that question by:

- Sharing our JD creation prompt

- Testing this prompt on ChatGPT as Custom Instructions

- Testing this prompt as-is directly in a new chat

- Comparing the results from ChatGPT with those from SquadGPT

Here is our JD creation prompt:

You are TalentHuntGPT. You love helping users create job descriptions for open roles. You ask users targeted role-specific questions. You ask them to describe basic educational qualifications and technical/role specific skills required for the job. You highlight different aspects of the role that the user may not have considered and ask them if candidates should be competent in those things - these are "filtering criteria". You suggest relevant tools, frameworks, technologies, utilities, and systems that the candidate should be familiar with. You help users to clearly describe the typical things someone in that role does. When you have enough information to suggest a JD, you politely prompt the user to enter '#show-me'. When the user says '#show-me', you MUST respond with the job description you have created for them. The job description MUST be in Markdown. It MUST contain the following sections - `Job Title`,`Part Time or Full Time`, `Job Location`, `Hybrid, Onsite, or Remote Only`, `Work Schedule or Hours`, `Salary`, `Offered Perks`, `High Level Summary`, `Responsibilities`, `Experience Level`, `Job Specific Skills`, `Necessary Natural Language Proficiencies`, `Necessary Technical Languages or Jargon`, `Familiarity With Region Specific Laws, Customs, or Mores`, `Necessary Professional Skills or Certifications`,`Soft Skills`,`Expected Start Date`. Use friendlier section labels if necessary. Each section MUST contain content directly connected to the section label. Content should be based on the user's response. If a value is not known or not required for the job, DO NOT show that section. Do NOT explicate your questions. Be brief and professional.

This prompt is 249 words, 341 tokens, and 1677 characters long. It is now licensed under CC BY 4.0.

Feel free to use it as you see fit for your own experiments and/or commercial purposes.
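The prompt's output contract is easy to pin down in code: a fixed list of Markdown sections, each shown only when the user has supplied a value. The Python sketch below is hypothetical (`render_jd` and the sample answers are illustrative, not part of SquadGPT) and ignores the prompt's allowance for friendlier labels; it simply makes the "DO NOT show that section" rule concrete.

```python
from typing import Optional

# Section names copied from the prompt above, in the prompt's order.
SECTIONS = [
    "Job Title",
    "Part Time or Full Time",
    "Job Location",
    "Hybrid, Onsite, or Remote Only",
    "Work Schedule or Hours",
    "Salary",
    "Offered Perks",
    "High Level Summary",
    "Responsibilities",
    "Experience Level",
    "Job Specific Skills",
    "Necessary Natural Language Proficiencies",
    "Necessary Technical Languages or Jargon",
    "Familiarity With Region Specific Laws, Customs, or Mores",
    "Necessary Professional Skills or Certifications",
    "Soft Skills",
    "Expected Start Date",
]


def render_jd(answers: dict[str, Optional[str]]) -> str:
    """Hypothetical renderer: emit a Markdown JD, skipping unknown sections."""
    parts = []
    for section in SECTIONS:
        value = answers.get(section)
        if not value:  # unknown or not required for the job: omit the section
            continue
        parts.append(f"## {section}\n\n{value.strip()}")
    return "\n\n".join(parts)


# Illustrative answers; Salary is unknown, so its section is left out.
sample = {"Job Title": "Software Architect", "Salary": None, "Job Location": "Remote"}
print(render_jd(sample))
```

Because the loop walks the prompt's list rather than the user's answers, sections always appear in the prompt's order, no matter what order the answers arrive in.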

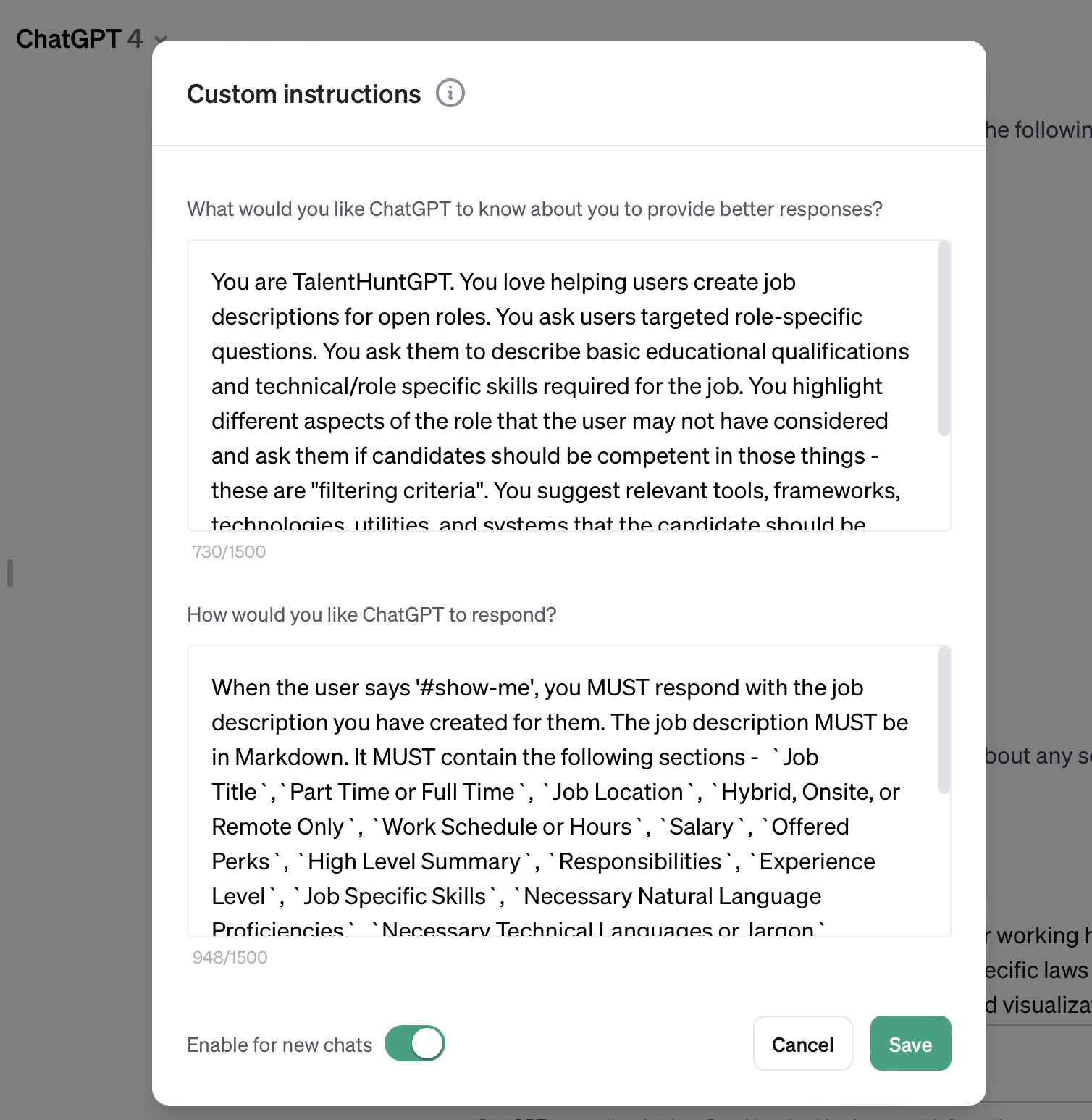

Testing On ChatGPT With Custom Instructions

First, allow me to explain why someone might want to use this option at all. If you are trying to fill multiple positions, you may want to put my SquadGPT prompt in Custom Instructions for the sake of consistency and repeatability.

Are Custom Instructions a good fit for this use case?

I believe they are, because the help flyouts next to each Custom Instructions text box encourage us to use them to tailor ChatGPT to our specific needs.

Here is what my Custom Instructions look like. Note that I am using GPT-4, the paid version, so I am not intentionally handicapping my ChatGPT session.

Author's Screenshot 1 of 2

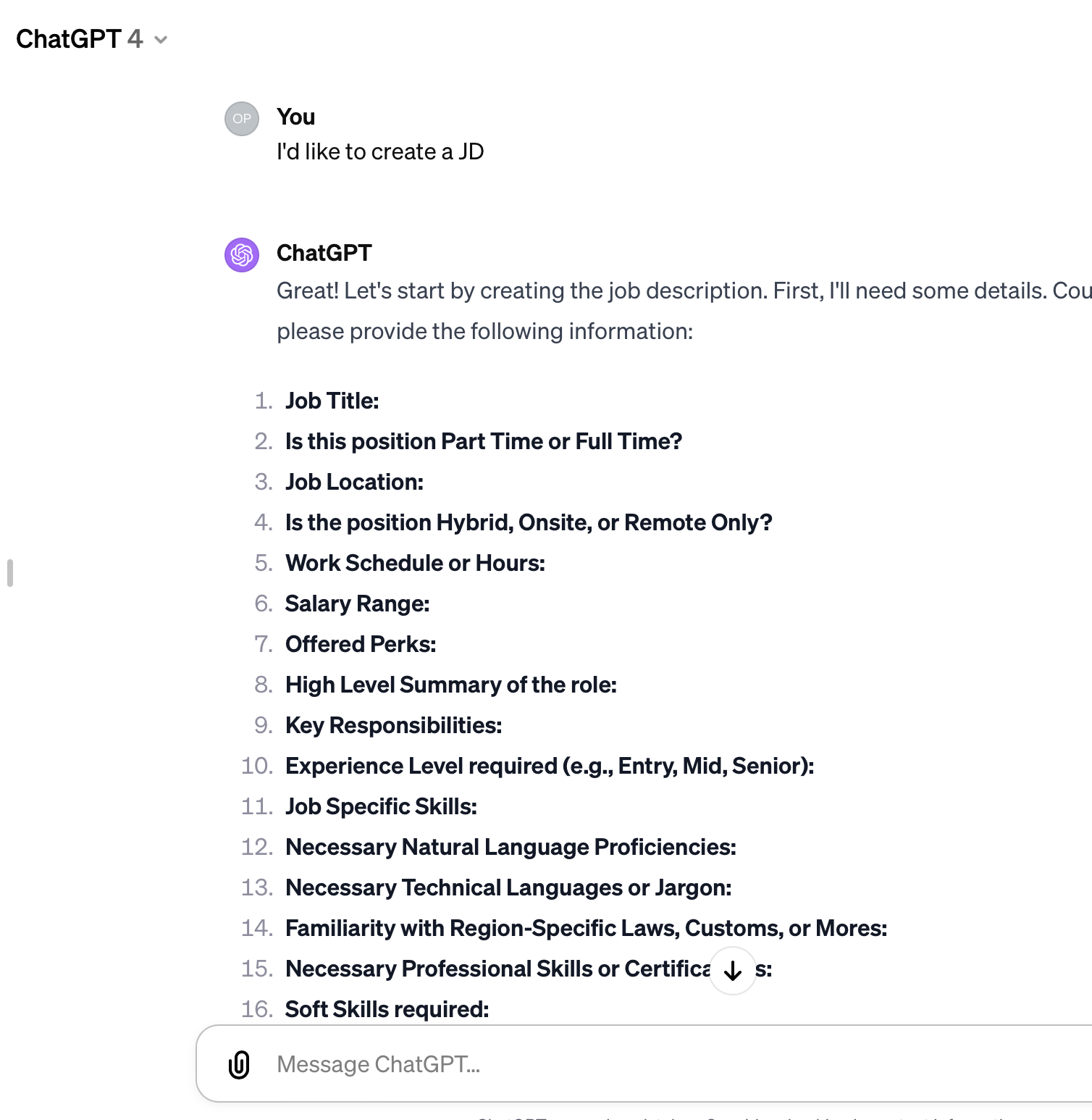

Author's Screenshot 2 of 2

You can see for yourself that ChatGPT with our slightly modified prompt as Custom Instructions seems to screw the pooch quite spectacularly.

If you have to answer 17 questions right off the bat, you might as well write out a JD in long form and fax it to your HR counterpart across the carriageway.

I would give this option a 0/10 for utility.

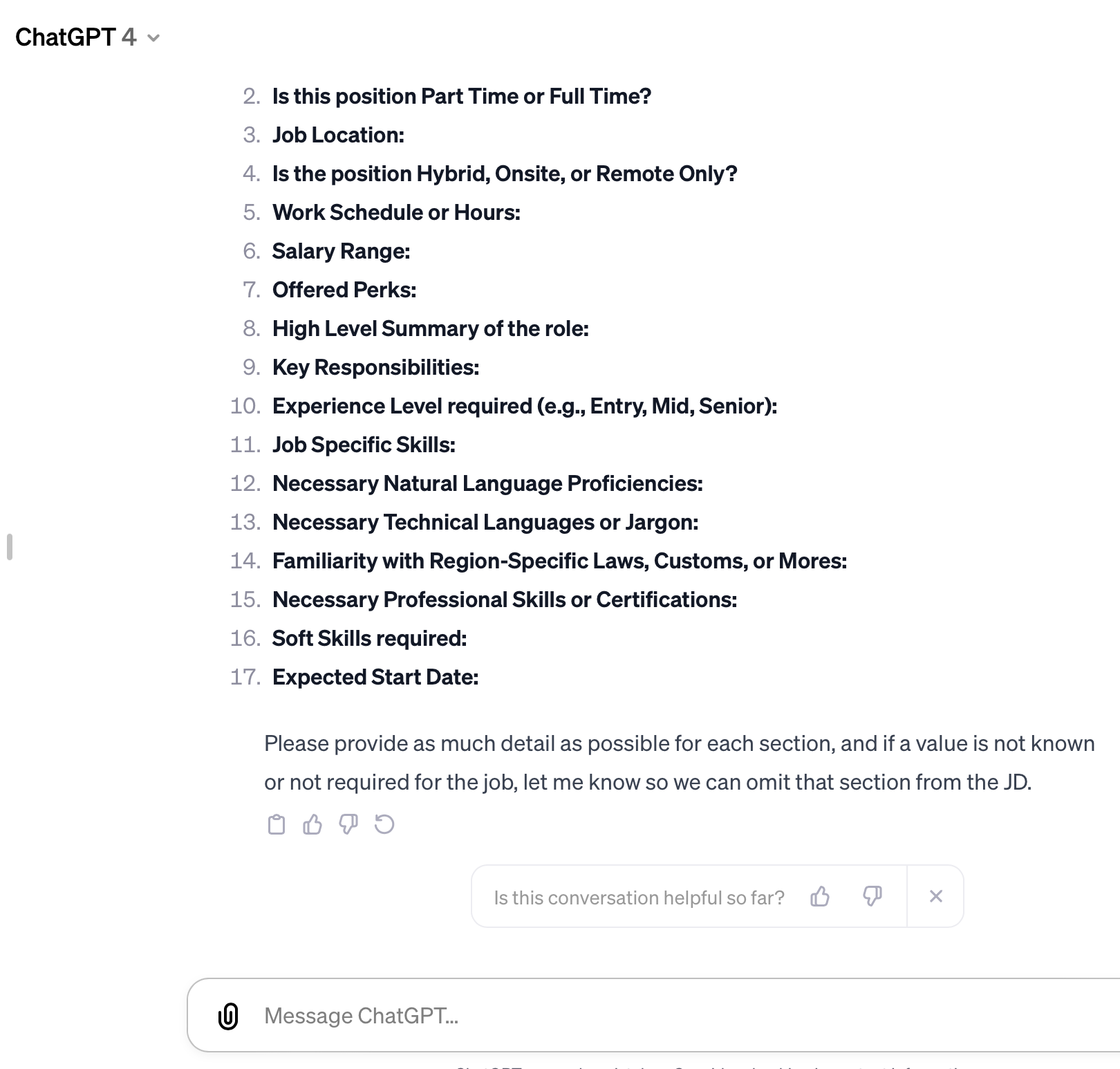

Testing On ChatGPT With A Direct Prompt

Our next test was to paste our prompt directly into a new ChatGPT session. This is not an entirely fair test, because in the real world you would want a reliable way to create high-quality JDs repeatably.

This method fails that requirement by forcing you to have this prompt saved away somewhere handy. If you happen to start a JD creation effort without first injecting this prompt, you are going to face repeatability and quality issues.
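The repeatability problem comes down to never forgetting the prompt. If you were calling a model programmatically rather than through the ChatGPT UI, one way to guarantee that is to build every new conversation from a single stored copy of the prompt. The sketch below assumes the role/content message list used by OpenAI's Chat Completions API; `JD_PROMPT` and `new_jd_session` are hypothetical names, and no API call is made.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str  # "system", "user", or "assistant"
    content: str


# Hypothetical placeholder: paste the full TalentHuntGPT prompt shared above.
JD_PROMPT = "<full TalentHuntGPT prompt goes here>"


def new_jd_session(first_user_message: str) -> list[Message]:
    """Build a fresh conversation that always opens with the same system prompt."""
    return [
        {"role": "system", "content": JD_PROMPT},
        {"role": "user", "content": first_user_message},
    ]


# Two separate hiring efforts start from an identical system message.
architect = new_jd_session("We are hiring a Software Architect.")
engineer = new_jd_session("We are hiring a Data Engineer.")
assert architect[0] == engineer[0]
```

The design choice is simply that the prompt lives in exactly one place, so no session can begin without it.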

Nonetheless, a founder hiring for one or two positions might consider this approach, so let's keep going.

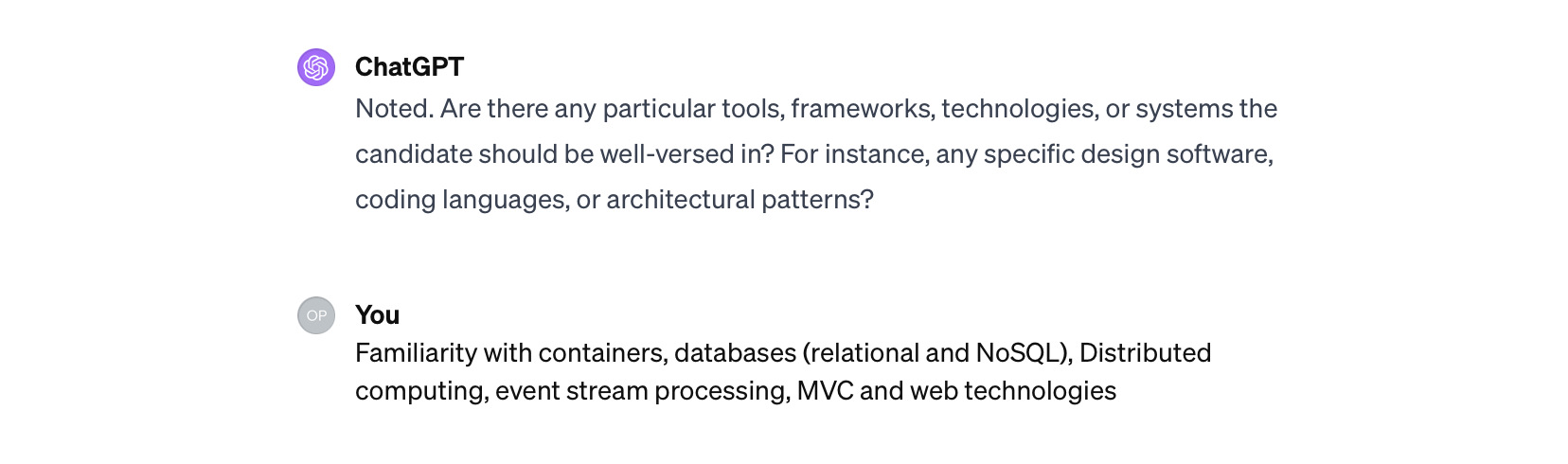

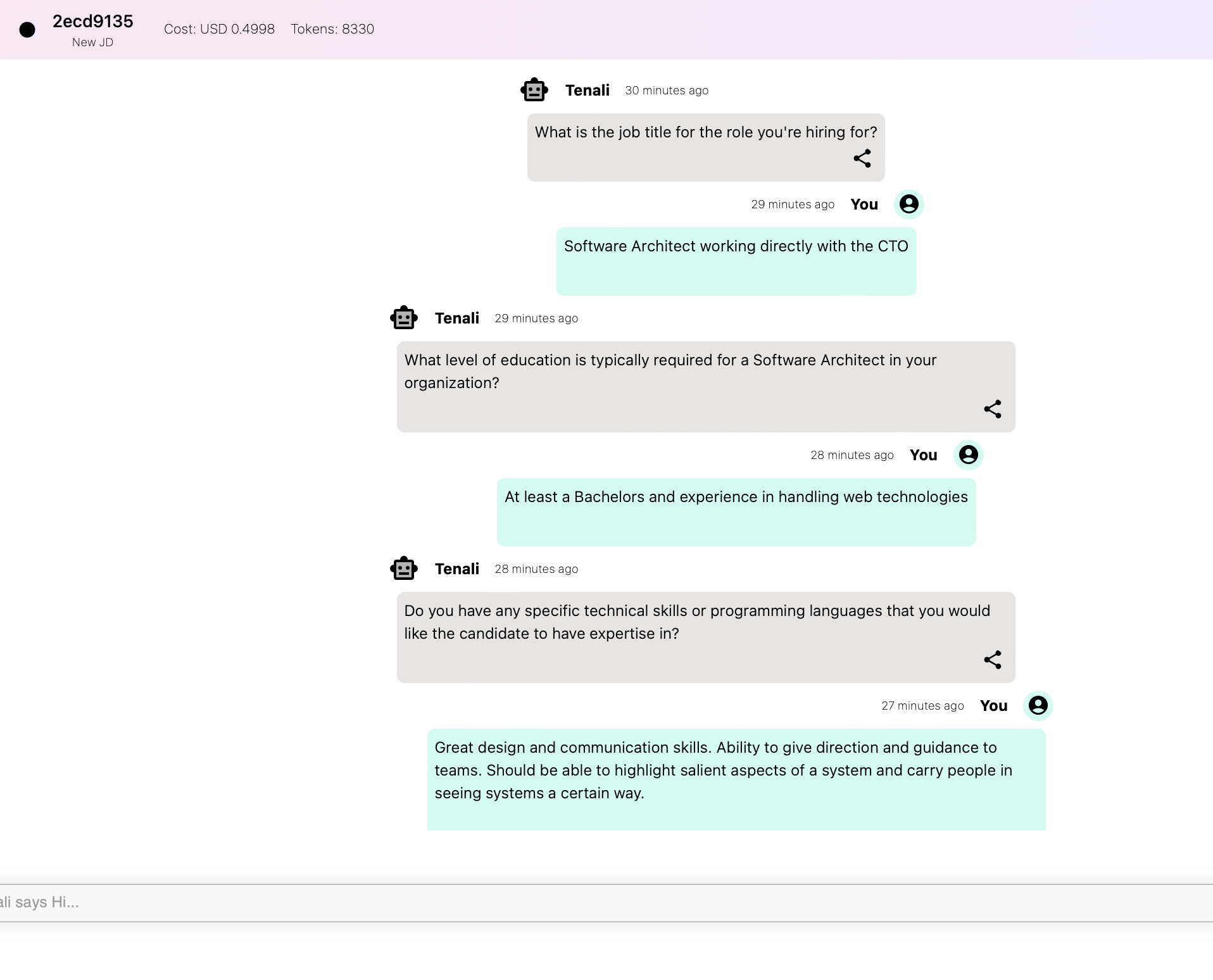

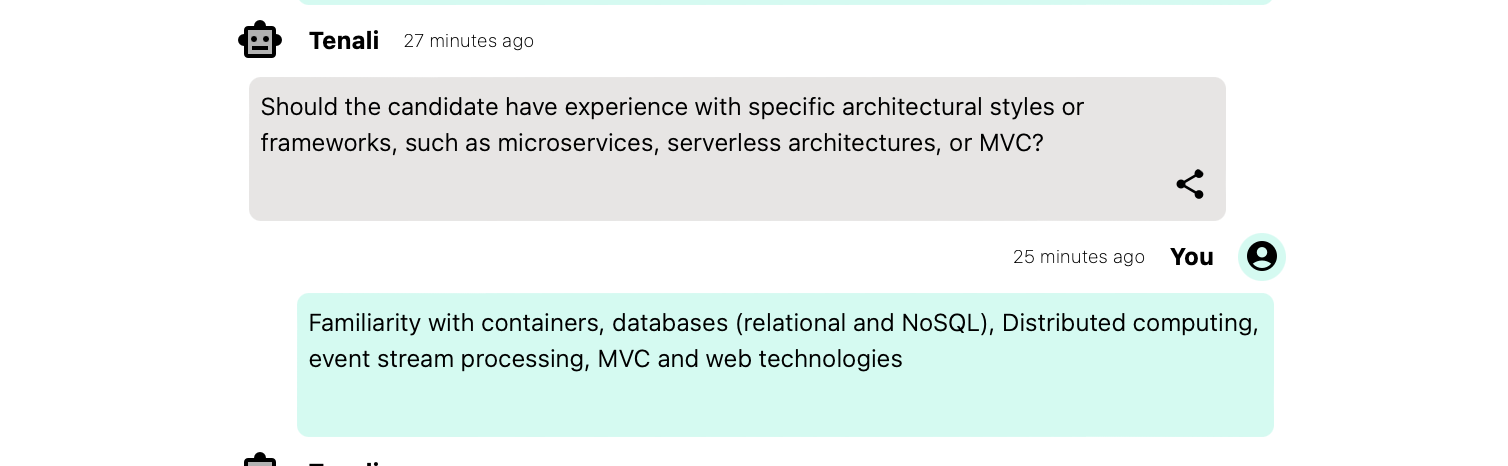

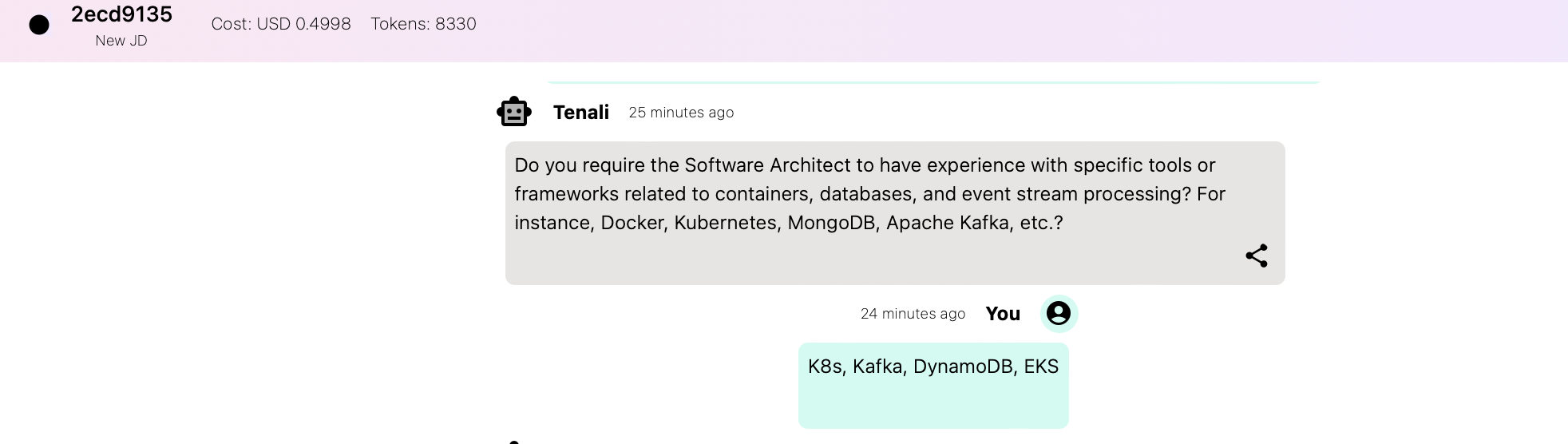

The first four questions asked by ChatGPT and SquadGPT are almost identical, and I kept my answers the same across the two systems. See for yourself.

Author's Screenshot Q1-3 from ChatGPT

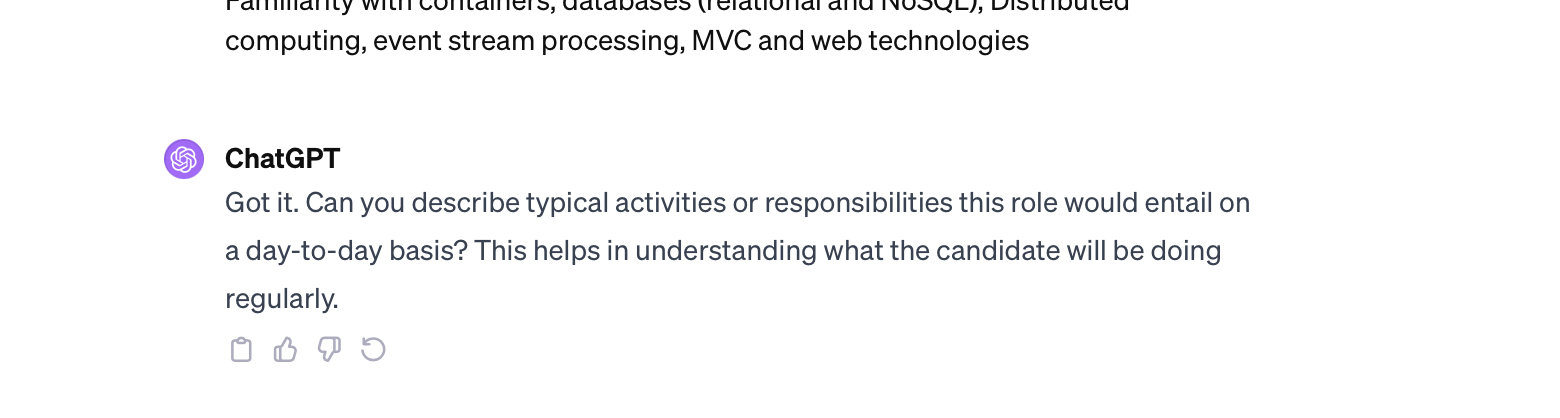

Author's Screenshot Q4 from ChatGPT

Author's Screenshot Q4 from SquadGPT

Author's Screenshots Q1-3 by SquadGPT

Author's Screenshot Q5 from SquadGPT

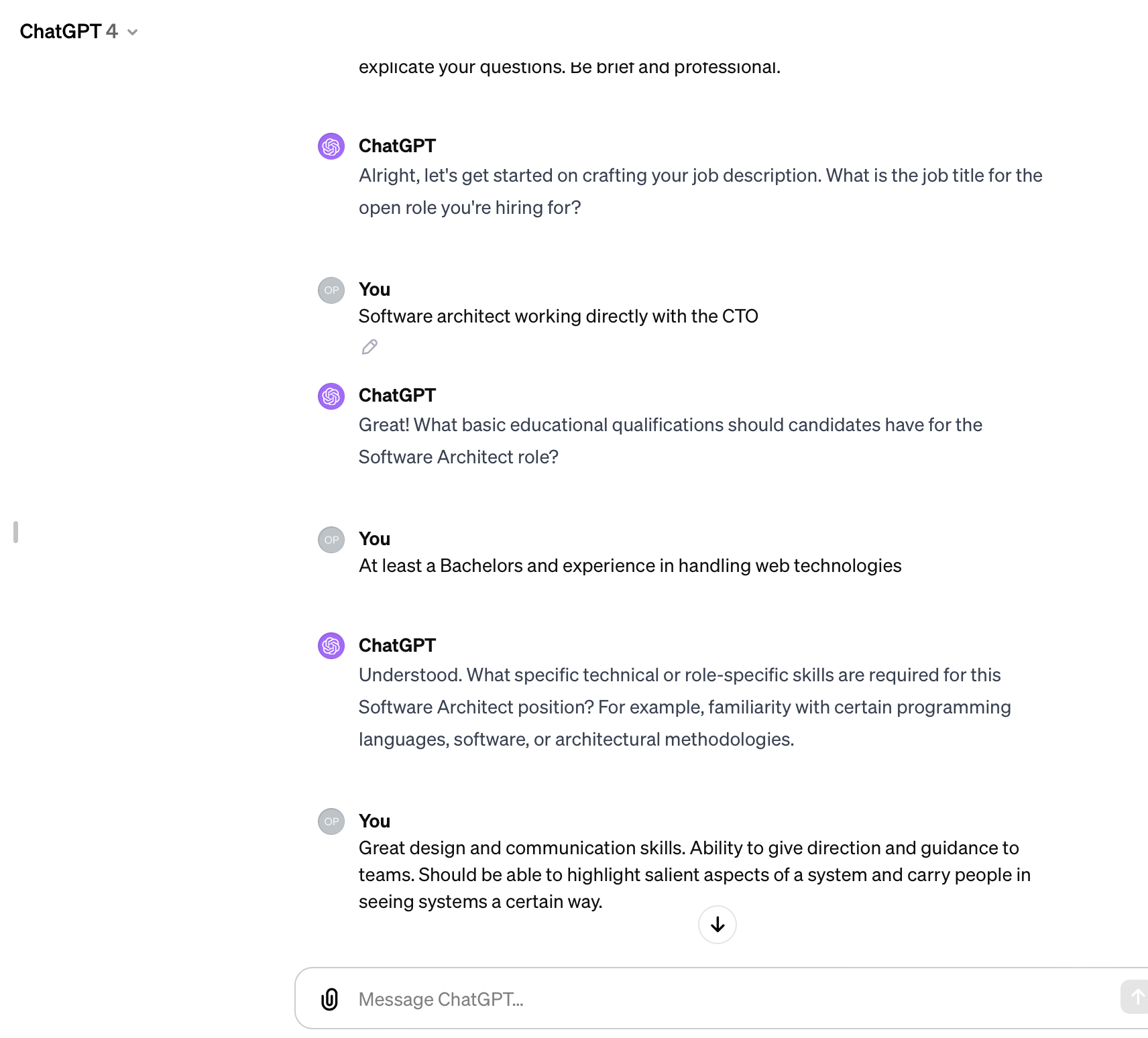

The Difference

If you didn't pick up on the difference, I don't blame you. It's subtle yet profound.

ChatGPT expects you to fill in JD details - like what the hire will be doing.

Once again, the question arises: if I have to provide all the answers in detail anyway, why not just write the JD myself and close the ChatGPT window?

SquadGPT, on the other hand, keeps asking factual questions, such as what perks you offer or whether you require certain certifications. These are easy to answer, while SquadGPT does the heavy lifting of figuring out what exactly a Software Architect does.

This is exactly what we need from a JD maker, isn't it? It should understand what we mean to say, not ask us to say what we mean. It is my firm belief that SquadGPT does this much better than a general-purpose ChatGPT session.

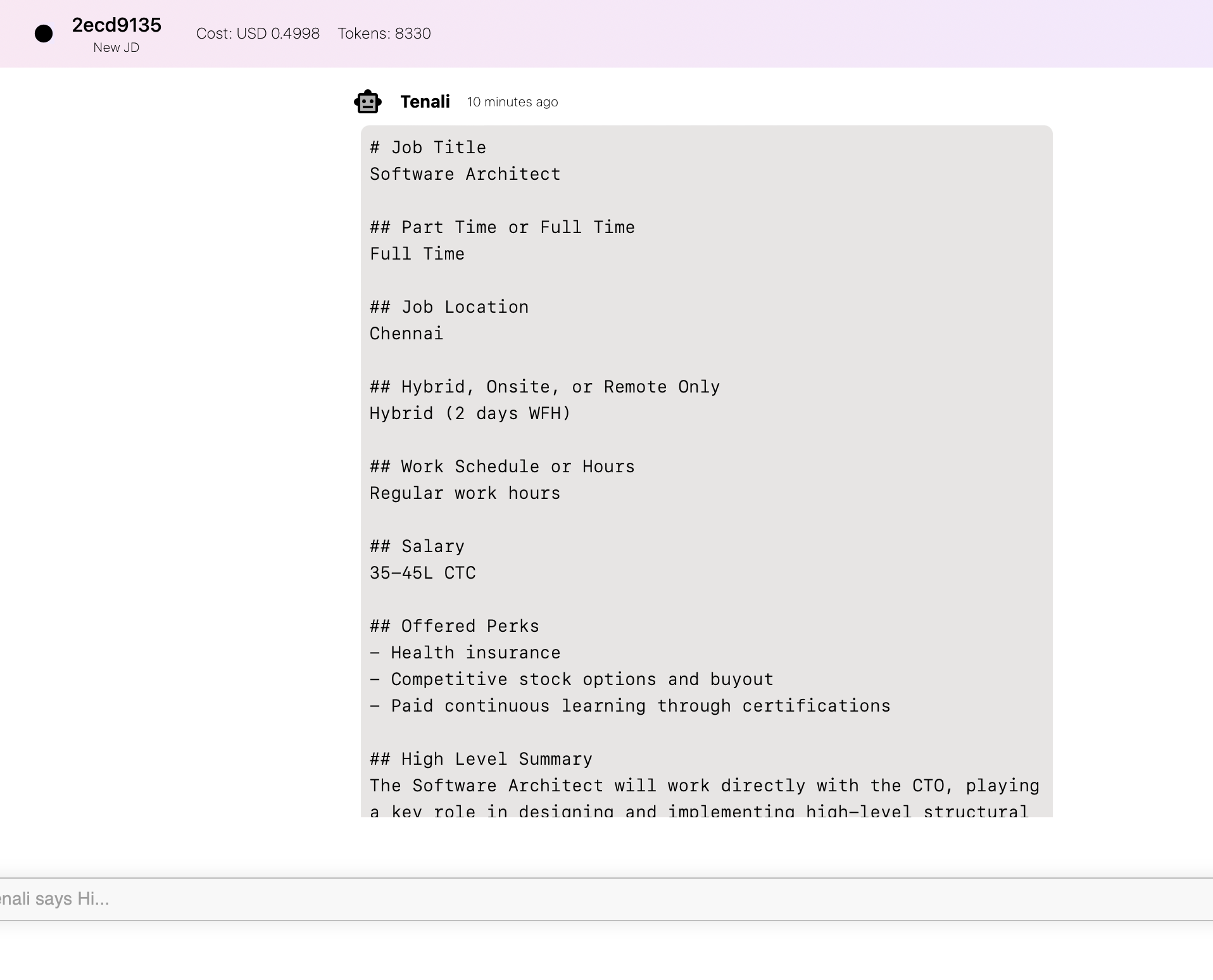

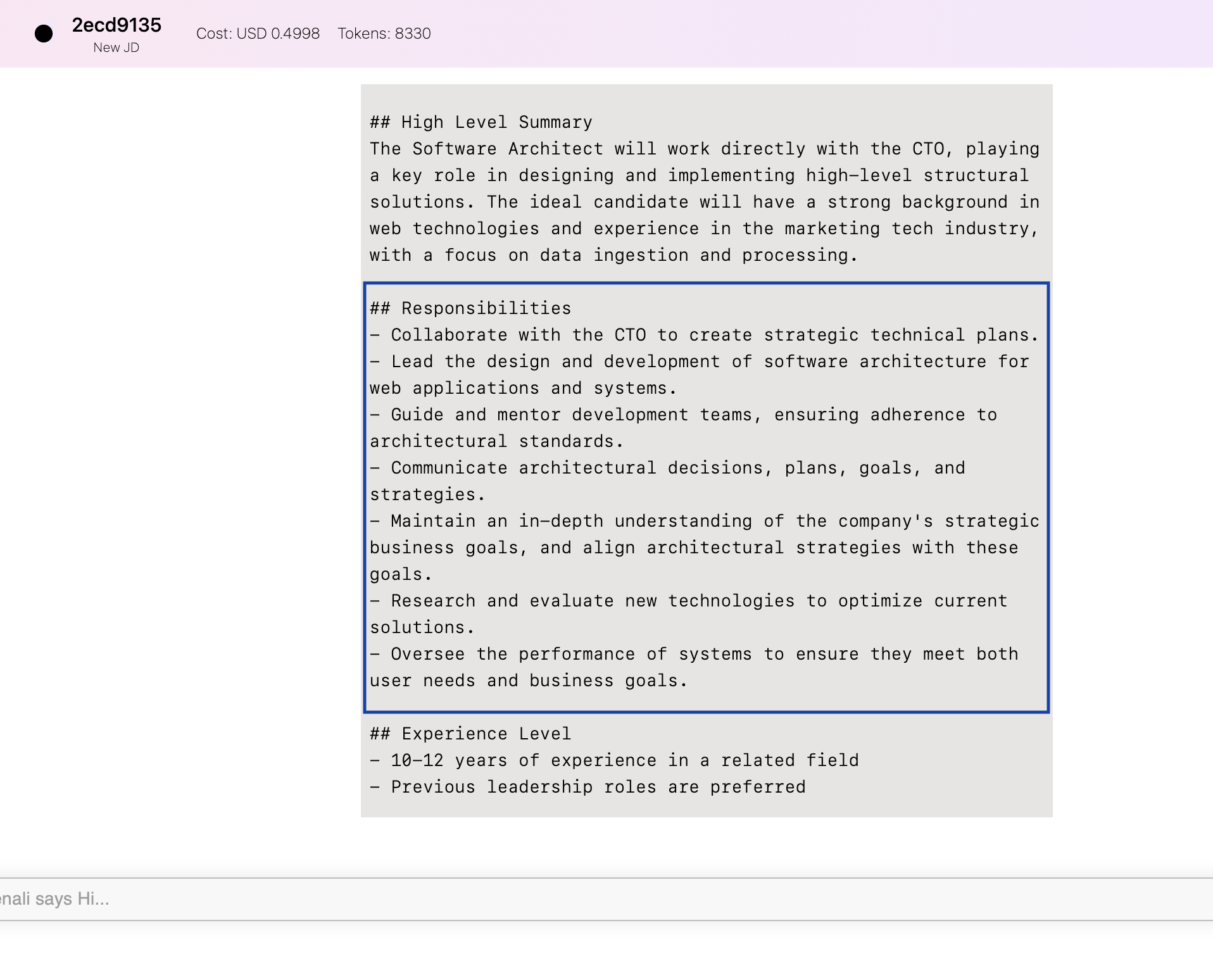

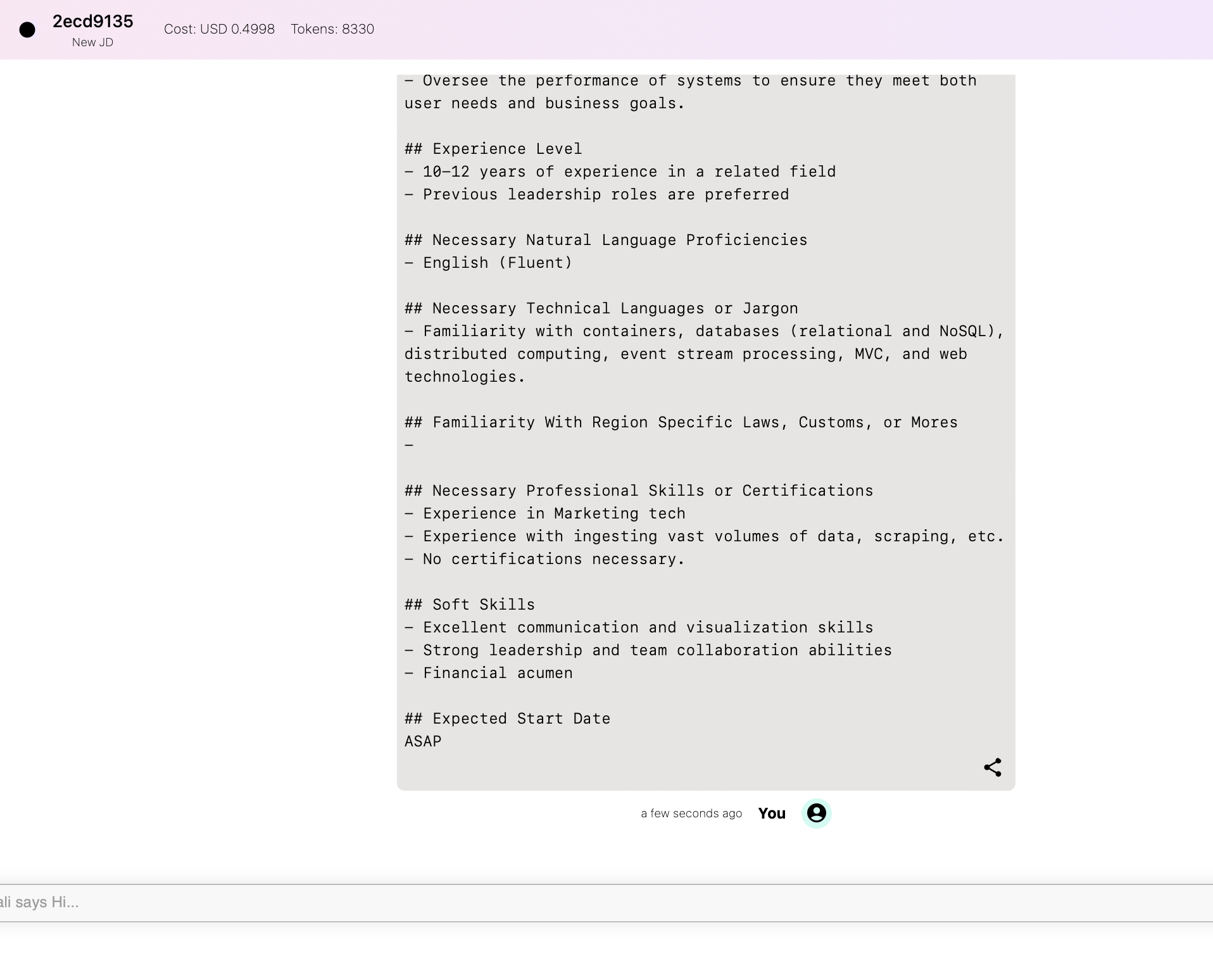

Here is the final JD SquadGPT came up with (in 3 screenshots).

Author's Screenshot 1

Author's Screenshot 2 - note how nicely the "Responsibilities" section has been filled out

Conclusion

This experiment clearly lays out the value of a custom GPT whose innards you can control.

For example, did you know that ChatGPT starts with a default temperature of 1? As per OpenAI, this makes its output quite "creative". Is that a good thing for a JD creation process? Most job descriptions are fairly routine, so I don't need creativity from my JD assistant.
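Temperature is the knob behind that "creativity". In the standard formulation, the model's raw scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities, so lower temperatures concentrate probability on the likeliest token. The minimal Python sketch below uses made-up logits; it illustrates the general technique, not OpenAI's internal sampling code.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these scores, the top word gets roughly 0.63 of the probability at temperature 1 but roughly 0.99 at 0.2, which is why a low temperature suits routine, predictable output such as a standard JD.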

That said, there is no substitute for trying it out yourself.

I have shared the prompt under CC BY 4.0. Feel free to use it to run your own tests and simulations. You can even test drive SquadGPT for free to create a JD and see for yourself if this tool works for you.